Problem

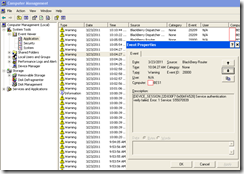

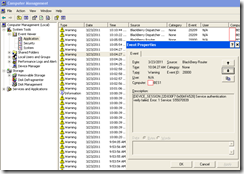

For those who have come across my previous post on an Exchange issue: after getting mail flow going and clearing the Exchange server's event logs of warnings and errors, the next issue I had to tackle was the BES server. Even though the storage administrator restored the BES server from a month-old copy and rejoined it to the domain, none of the users with BlackBerry devices were able to send or receive mail. Reviewing the Application log in Event Viewer, I could see warnings with event IDs 20154, 20000, 20590 and 20209 being logged consistently:

The warnings show the following messages:

Event ID: 20154

User someFirstName someLastName not started.

Event ID: 20000

[DEVICE_SRP:somePIN:0x003B4B90] Receive_UNKNOWN, VERSION=2, CMD=241

Event ID: 20000

[DEVICE_SESSION:somePIN:0x00B391F0] Timer Event. Exceeded service authentication timeout. No authenticated services. Releasing session.

Event ID: 20590

{someUser} BBR Authentication failed! Error=1

Event ID: 20000

[DEVICE_SESSION:somePIN:0x00AFA528] Service authentication verify failed. Error: 1 Service: S55070939

Event ID: 20209

{SomeFirstName someLastName} DecryptDecompress() failed, Tag=7976793, Error=604

These warnings repeat for a few users until an informational event is logged indicating:

Event ID: 50079

8 user(s) failed to initialize
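Because the fix below has to be applied one user at a time, it helps to know exactly which accounts are affected. The following is a rough Python sketch, assuming you have exported the Application log messages to a plain-text file with one message per line, worded as quoted above (the export format and the regular expressions are my assumptions, not anything BES provides). It collects the user names from the three per-user warnings. Note that the BBR authentication warning may show an account name rather than the display name used by the other two.

```python
import re
from collections import defaultdict
from collections.abc import Iterable

# Hypothetical patterns based on the warning text quoted above;
# adjust them if your export format differs.
PATTERNS = {
    "not_started": re.compile(r"^User (?P<user>.+?) not started\.$"),
    "bbr_auth_failed": re.compile(r"^\{(?P<user>[^}]+)\} BBR Authentication failed!"),
    "decrypt_failed": re.compile(r"^\{(?P<user>[^}]+)\} DecryptDecompress\(\) failed"),
}


def affected_users(lines: Iterable[str]) -> dict[str, set[str]]:
    """Map each user found in the messages to the set of symptoms logged for them."""
    found: dict[str, set[str]] = defaultdict(set)
    for raw in lines:
        line = raw.strip()
        for symptom, pattern in PATTERNS.items():
            match = pattern.search(line)
            if match:
                found[match.group("user")].add(symptom)
    return dict(found)
```

For example, `with open("bes_app_log.txt") as f: print(affected_users(f))` (the file name is hypothetical) gives you the list of users to work through in the BAS.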

Solution

So how did I fix this? The solution was actually quite simple, although there was some manual labour involved. I had to log into the BAS (BlackBerry Administration Service) and choose "Resend service books to device" for each affected user:

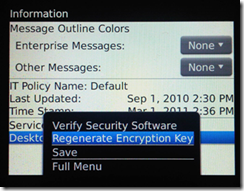

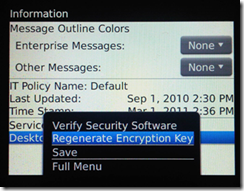

Once that's done, take the user's BlackBerry and regenerate the encryption key. With the new BlackBerry Torch OS available, the menus may differ, so here are the steps for the two OS versions I had to work with:

BlackBerry OS 5:

Options –> Security Options –> Information –> Desktop –> Regenerate Encryption Key.

After selecting Regenerate Encryption Key, navigate to the Enterprise Activation screen and you will see the synchronization process begin:

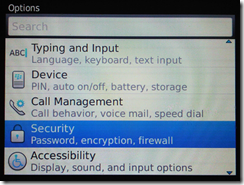

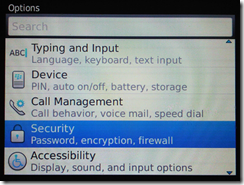

BlackBerry Torch OS:

Options –> Security –> Security Status Information –> Desktop –> Regenerate Encryption Key.

Hope this helps anyone who runs into a similar problem.