One of the most common questions I’ve been asked about cost management in Azure is what the options are for powering virtual machines off and on based on a schedule. A quick search returns a mix of Azure Automation Accounts, the Auto-Shutdown blade within the VM (which only powers off, not on), Logic Apps, the new Automation Tasks, and Azure Functions. Each of these options has its advantages and disadvantages, as well as an associated cost to execute, and a few of them are limited in what they can do for this type of automation.

As much as I like Logic Apps for their visual, little-to-no-code approach, I find it a bit cumbersome to configure each step via a GUI, and the flow of a Logic App can quickly become difficult to follow when there are multiple branches of conditions. My preference for most automation is Function Apps because they allow me to write code to do anything I need. With that said, it’s probably no surprise that this post demonstrates this type of automation with an Azure Function App.

The scenario I want to provide is an ask from a client who wanted the following:

- Auto Start virtual machines at a certain time

- Auto Deallocate virtual machines at a certain time

- Capability to set start and deallocate schedules for weekdays and weekends

- Capability to indicate whether virtual machines should be powered on or deallocated over the weekend (they had some workloads that did not need to be on during the week but had to be on over the weekend)

- Lastly, and the most important, they wanted to use Tags to define the schedule because they use Azure Policies to enforce tagging

There are plenty of scripts available on the internet that provide most of this functionality, but I could not find one that allowed this type of control over the weekend, so I spent some time writing one.

Before I begin, I would like to explain that the script I wrote uses Azure Resource Graph to query the status of the virtual machines, their resource groups, and their tags. I find that ARM can take a very long time to interact with the resource providers, whereas querying the Resource Graph is much faster. Those who have used the Resource Graph Explorer in the Azure portal will recognize the KQL query I used to retrieve the information. What’s great about this approach is that we can test the query directly in the portal.
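To give a feel for what such a query can look like, here is a minimal sketch. It is not the query from my repo: the helper names `Get-ScheduledVmQuery` and `Get-ScheduledVm` are made up for illustration, and the filter simply assumes we only care about VMs that carry at least one of the scheduling tags. The KQL inside the here-string can be pasted into Resource Graph Explorer as-is to test it.

```powershell
# Hypothetical helper: returns KQL that lists tagged VMs with their power state.
function Get-ScheduledVmQuery {
    @'
Resources
| where type =~ 'microsoft.compute/virtualmachines'
| where isnotempty(tags['WD-AutoStart']) or isnotempty(tags['WD-AutoDeallocate']) or isnotempty(tags['Weekend'])
| extend powerState = tostring(properties.extended.instanceView.powerState.code)
| project name, resourceGroup, subscriptionId, powerState, tags
'@
}

# Hypothetical wrapper: runs the query against one or more subscriptions.
# Requires the Az.ResourceGraph module and an authenticated Az context.
function Get-ScheduledVm {
    param(
        [Parameter(Mandatory)] [string[]] $SubscriptionId
    )
    # -First caps the page size at 1000 rows; larger estates would need paging.
    Search-AzGraph -Query (Get-ScheduledVmQuery) -Subscription $SubscriptionId -First 1000
}
```

The `powerState` column comes back as values such as `PowerState/running` or `PowerState/deallocated`, which is enough to decide whether a VM needs any action at all.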

The tag design that controls power on, deallocation, and scheduling for the virtual machines is as follows:

| Tag | Value | Example | Purpose | Behavior |
| --- | --- | --- | --- | --- |
| WD-AutoStart | Time in 24-hour format | 08:00 | Defines the time from which the VM should be powered on during the weekday | This condition is met if the time is equal to or past the value, Monday to Friday |
| WD-AutoDeallocate | Time in 24-hour format | 17:00 | Defines the time from which the VM should be powered off during the weekday | This condition is met if the time is equal to or past the value, Monday to Friday |
| WE-AutoStart | Time in 24-hour format | 09:00 | Defines the time from which the VM should be powered on during the weekend | This condition is met if the time is equal to or past the value, Saturday and Sunday |
| WE-AutoDeallocate | Time in 24-hour format | 15:00 | Defines the time from which the VM should be powered off during the weekend | This condition is met if the time is equal to or past the value, Saturday and Sunday |
| Weekend | On or Off | On | Defines whether the VM should be on or off over the weekend | Set this when a weekday schedule is configured and the VM needs to be on over the weekend, as it is the condition that turns the VM back on after the power off on a Friday |
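The tag semantics above can be sketched as a small decision function. This is an illustration under stated assumptions, not the logic from my repo: the function name `Get-DesiredVmAction` is hypothetical, the weekend tags are assumed to be named `WE-AutoStart` and `WE-AutoDeallocate`, the most recently passed time is assumed to win when both have passed, and the `Weekend` tag is assumed to apply only when no weekend times are set.

```powershell
# Hypothetical helper: maps a VM's tags and the local time to Start, Deallocate, or None.
function Get-DesiredVmAction {
    param(
        [Parameter(Mandatory)] [hashtable] $Tags,
        [Parameter(Mandatory)] [datetime]  $LocalNow
    )

    $isWeekend = $LocalNow.DayOfWeek -in @([DayOfWeek]::Saturday, [DayOfWeek]::Sunday)
    $prefix = if ($isWeekend) { 'WE' } else { 'WD' }   # 'WE' prefix is an assumption

    # Assumed fallback: with no weekend times set, the Weekend tag decides.
    $hasWeekendTimes = $Tags.ContainsKey('WE-AutoStart') -or $Tags.ContainsKey('WE-AutoDeallocate')
    if ($isWeekend -and -not $hasWeekendTimes) {
        if ($Tags['Weekend'] -eq 'On')  { return 'Start' }
        if ($Tags['Weekend'] -eq 'Off') { return 'Deallocate' }
        return 'None'
    }

    # Collect every scheduled time that is equal to or earlier than now.
    $passed = @()
    foreach ($action in 'Start', 'Deallocate') {
        $tagName = "${prefix}-Auto${action}"
        if ($Tags.ContainsKey($tagName)) {
            # Expects zero-padded 24-hour values such as 08:00 or 17:00.
            $time = [timespan]::ParseExact([string]$Tags[$tagName], 'hh\:mm', [cultureinfo]::InvariantCulture)
            if ($LocalNow.TimeOfDay -ge $time) {
                $passed += [pscustomobject]@{ Action = $action; Time = $time }
            }
        }
    }

    if ($passed.Count -eq 0) { return 'None' }
    # The latest time that has already passed determines the action.
    return ($passed | Sort-Object -Property Time | Select-Object -Last 1).Action
}

# Usage with the example values from the table (September 27, 2023 is a Wednesday):
$tags = @{ 'WD-AutoStart' = '08:00'; 'WD-AutoDeallocate' = '17:00'; 'Weekend' = 'On' }
Get-DesiredVmAction -Tags $tags -LocalNow ([datetime]'2023-09-27T09:30:00')   # Start
Get-DesiredVmAction -Tags $tags -LocalNow ([datetime]'2023-09-27T18:00:00')   # Deallocate
Get-DesiredVmAction -Tags $tags -LocalNow ([datetime]'2023-09-30T10:00:00')   # Start (Weekend = On)
```

Keeping the decision in a pure function like this makes it easy to test schedules locally without touching any Azure resources.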

The following is an example of a virtual machine with tags applied:

With the explanation out of the way, let’s get started with the configuration.

Step #1 – Create Function App

Begin by creating a Function App with the PowerShell Core runtime stack, version 7.2. The hosting option can be Consumption, Premium, or App Service plan, but for this example we’ll use Consumption:

Proceed to configure the rest of the properties of the Function App:

I always recommend turning on Application Insights whenever possible as it helps with debugging but it is not necessary:

You can integrate the Function App with a GitHub account for CI/CD, but for this example we won’t be enabling it:

Proceed to create the Function App:

Step #2 – Turn on System Assigned Managed Identity and Assign Permissions

To avoid managing certificates and secrets, and to enhance the security posture of your Azure environment, it is recommended to use managed identities wherever possible. Proceed to turn on the System assigned managed identity in the Identity blade of the Function App. This creates an Enterprise Application object in Azure AD / Entra ID, which we can then use to assign permissions to the resources in the subscription:

You’ll see an Object (principal) ID created for the Function App after successfully turning on the System assigned identity:

Browsing to the Enterprise Applications in Entra ID will display the identity of the Function App:

With the system assigned managed identity created for the Function App, we can now proceed to grant it permissions to the resources it needs access to. This example will assign the managed identity the Virtual Machine Contributor role on the subscription so it can perform start and deallocate operations on all the virtual machines. Navigate to the subscription’s Access control (IAM) blade and click on Role assignments:

Proceed to select the Virtual Machine Contributor role:

Locate the Function App for the managed identity and save the permissions:
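For those who prefer to script this step rather than click through the portal, the same role assignment can be sketched with the Az.Resources module. The function name `Grant-VmContributorToIdentity` is made up for illustration, and the principal ID is the Object (principal) ID shown on the Identity blade. The account running it is assumed to have rights to create role assignments on the subscription (for example Owner or User Access Administrator).

```powershell
# Hypothetical helper: grants Virtual Machine Contributor on a subscription
# to the Function App's system assigned managed identity.
function Grant-VmContributorToIdentity {
    param(
        [Parameter(Mandatory)] [string] $PrincipalId,     # Object (principal) ID from the Identity blade
        [Parameter(Mandatory)] [string] $SubscriptionId
    )

    New-AzRoleAssignment -ObjectId $PrincipalId `
        -RoleDefinitionName 'Virtual Machine Contributor' `
        -Scope "/subscriptions/$SubscriptionId"
}
```

If subscription-wide access is broader than you are comfortable with, the `-Scope` value could instead point at a resource group that holds the scheduled VMs.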

Step #3 – Configure the Function App

It’s currently September 27, 2023 as I write this post, and I noticed that the Function App page layout and blades have changed. The Functions blade under the Functions option no longer appears to exist, so create the function by selecting Overview and then, under the Functions tab, clicking Create in Azure Portal:

The type of function we’ll be creating will be the Timer Trigger and the Schedule will be configured as the following CRON expression:

0 0 * * * 0-6

The above CRON expression runs the function at the top of every hour, every day of every month, Sunday to Saturday.
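Azure Functions timer triggers use six-field NCRONTAB expressions, with a leading seconds field, which can trip up anyone used to five-field cron. The following throwaway snippet simply labels each field of the expression above. The variable names are mine and nothing here is part of the function itself.

```powershell
# Label each field of the six-field NCRONTAB expression used by the timer trigger.
$schedule   = '0 0 * * * 0-6'
$fieldNames = 'second', 'minute', 'hour', 'day', 'month', 'day-of-week'
$values     = $schedule -split ' '

$breakdown = [ordered]@{}
for ($i = 0; $i -lt $fieldNames.Count; $i++) {
    $breakdown[$fieldNames[$i]] = $values[$i]
}

# second = 0, minute = 0, hour = *, day = *, month = *, day-of-week = 0-6
$breakdown
```

Because the function only runs once an hour and the tag conditions are met when the time is equal to or past the value, a tag such as 08:30 would not be acted on until the 09:00 run. If finer granularity is needed, the minute field can be changed, for example `0 */15 * * * *` for every 15 minutes.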

Once the Function is created, proceed to click on Code + Test:

The code for this Function can be copied from my GitHub repo at the following URL: https://github.com/terenceluk/Azure/blob/main/Function%20App/Start-Stop-VM-Function-Based-On-Tags.ps1
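Before opening the repo, it may help to see the general shape of the last step of such a script: applying a start or deallocate action to a VM. The following is a simplified sketch and not the code from the repo. The function names `Test-VmActionNeeded` and `Invoke-VmPowerAction` are hypothetical, and the power state strings are assumed to be in the `PowerState/...` form that Resource Graph returns.

```powershell
# Hypothetical helper: skip VMs that are already in the desired state.
function Test-VmActionNeeded {
    param(
        [string] $Action,       # 'Start', 'Deallocate', or 'None'
        [string] $PowerState    # e.g. 'PowerState/running', 'PowerState/deallocated'
    )
    if ($Action -eq 'Start')      { return $PowerState -ne 'PowerState/running' }
    if ($Action -eq 'Deallocate') { return $PowerState -ne 'PowerState/deallocated' }
    return $false
}

# Hypothetical helper: applies the action using the Az.Compute cmdlets.
function Invoke-VmPowerAction {
    param(
        [Parameter(Mandatory)] [ValidateSet('Start', 'Deallocate', 'None')] [string] $Action,
        [Parameter(Mandatory)] [string] $SubscriptionId,
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $Name,
        [string] $PowerState
    )

    if (-not (Test-VmActionNeeded -Action $Action -PowerState $PowerState)) { return }

    # Switch to the VM's subscription before calling the compute cmdlets.
    Set-AzContext -Subscription $SubscriptionId | Out-Null

    if ($Action -eq 'Start') {
        # -NoWait returns immediately instead of blocking until the VM is running.
        Start-AzVM -ResourceGroupName $ResourceGroupName -Name $Name -NoWait
    }
    else {
        # Stop-AzVM deallocates by default; -Force suppresses the confirmation prompt.
        Stop-AzVM -ResourceGroupName $ResourceGroupName -Name $Name -Force -NoWait
    }
}
```

Using `-NoWait` keeps each run short, which matters on a Consumption plan where the function has a limited execution time.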

Make sure you update the subscriptions list and the timezone you want this script to use for the Tags:

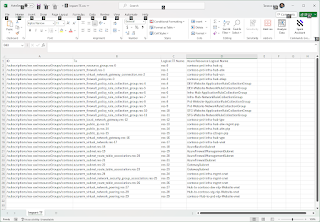
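The timezone matters because the Function App's clock runs in UTC by default, while the tag values are meant to be read as local times. A minimal sketch of the conversion is shown below. The function name `Get-LocalTimeForSchedule` is hypothetical and is not the variable or function used in the script, and the `-UtcNow` value is assumed to already be in UTC.

```powershell
# Hypothetical helper: converts a UTC timestamp into the time zone the tags are written for.
function Get-LocalTimeForSchedule {
    param(
        [Parameter(Mandatory)] [string] $TimeZoneId,
        [datetime] $UtcNow = [datetime]::UtcNow
    )

    # Mark the value as UTC so the conversion does not treat it as machine-local time.
    $utc = [datetime]::SpecifyKind($UtcNow, [DateTimeKind]::Utc)
    [System.TimeZoneInfo]::ConvertTimeBySystemTimeZoneId($utc, $TimeZoneId)
}
```

For example, `Get-LocalTimeForSchedule -TimeZoneId 'Eastern Standard Time'` would return the current time in that zone on a Windows-hosted Function App. The valid IDs can be listed with `[System.TimeZoneInfo]::GetSystemTimeZones()`.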

Save the code and navigate back out to the Function App, select App Files, then select requirements.psd1 in the heading to load the file. Note that the default template of this file has everything commented out. We could simply remove the hash character in front of 'Az' = '10.*' to load all Az modules, but I’ve had terrible luck doing so because downloading the files would cause the Function App to time out. What I like to do instead is specify exactly which modules I need.

The following are the modules my PowerShell script uses, so proceed to copy and paste these module requirements inside the @{ } block of the requirements.psd1 file:

'Az.Accounts' = '2.*'

'Az.Compute' = '2.*'

'Az.ResourceGraph' = '0.*'

Save the file and then switch to the host.json file:

As specified in the following Microsoft documentation: https://learn.microsoft.com/en-us/azure/azure-functions/functions-host-json#functiontimeout, we can increase the default timeout of a Consumption-based Function App by adding the following attribute and value to the file:

{

"functionTimeout": "00:10:00"

}

Proceed to add the value to handle large environments that may cause the Function App to exceed the 5-minute limit:

Save the file, navigate back to the Function’s Code + Test blade, and proceed to test the Function:

The execution should return a 202 Accepted HTTP response code and the virtual machines should now be powered on and off at the scheduled time:

I hope this blog post helps anyone who might be looking for a script that can handle weekend scheduling of VM start and stop operations.