One of the more common questions I’m asked every year is how to patch an ESXi host without using VMware Update Manager, since it is quite common for ESXi hosts as well as vCenter to have no internet access. When asked, I typically point people to one of my previous posts, where I demonstrated the process for a Nutanix infrastructure:

Upgrading Nutanix blades from ESXi 5.1 to 5.5

http://terenceluk.blogspot.com/2014/12/upgrading-nutanix-blades-from-esxi-51.html

That post includes the command for non-Nutanix deployments at the end, but since I’ve received follow-up questions about the process, I thought it would be a good idea to write a post that demonstrates it specifically for any ESXi deployment.

Step #1 – Identify and download desired ESXi build level

Begin by identifying which build you’d like to patch the ESXi host to:

https://kb.vmware.com/s/article/2143832

With the build number identified, proceed to the following URL to locate and download the patch:

https://my.vmware.com/group/vmware/patch#search

Download the patch:

The package you download should be a ZIP file containing files similar to the following:

vib20

index.xml

metadata.zip

vendor-index.xml
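As a quick sanity check before uploading, the archive listing can be compared against the entries above. This is only a minimal sketch: it assumes the four entries listed here are what an offline bundle should contain, and the function name `has_depot_layout` is hypothetical.

```shell
# Hypothetical helper: read an archive listing (for example the output of
# `unzip -l`) on stdin and succeed only if it names the entries listed above.
has_depot_layout() {
    listing=$(cat)
    for entry in index.xml vendor-index.xml metadata.zip; do
        # The entry must be the whole final field of a line, so that
        # "vendor-index.xml" is not mistaken for "index.xml".
        printf '%s\n' "$listing" | grep -q "[[:space:]]$entry\$" || return 1
    done
    # vib20 is a directory, so it shows up as a path prefix.
    printf '%s\n' "$listing" | grep -q "[[:space:]]vib20/" || return 1
    return 0
}
```

On a workstation with `unzip` installed, it could be used as `unzip -l ESXi550-201612001.zip | has_depot_layout && echo "looks like an offline bundle"`.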

Step #2 – Upload patch to datastore

Upload the package to a datastore that the host you’re patching has access to by using a utility such as WinSCP:

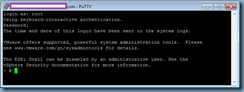

Step #3 – Patch ESXi host via CLI

Proceed to either access the console directly on the server or SSH to it.

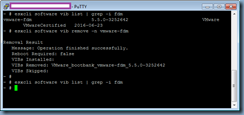

The command we’ll be using to install the patch looks like this:

esxcli software vib install -d <full path to patch file>
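Before committing the change, esxcli can report what the install would do without modifying the host. The following is a minimal sketch, assuming the `--dry-run` option of `esxcli software vib install` is available on your build; the wrapper name `preview_patch` is hypothetical.

```shell
# Hypothetical wrapper: preview a patch install without changing the host.
preview_patch() {
    depot="$1"
    # Fail early if the bundle is not where we think it is.
    if [ ! -f "$depot" ]; then
        echo "error: bundle not found: $depot" >&2
        return 1
    fi
    # --dry-run reports the VIBs that would be installed, removed or
    # skipped, but leaves the host untouched.
    esxcli software vib install -d "$depot" --dry-run
}
```

For example: `preview_patch /vmfs/volumes/rvbd_vsp_datastore/ESXi550-201612001.zip`.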

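Note that it is important to specify the full path to the patch file. As the error below shows, esxcli looks for a bare file name under /var/log/vmware rather than in the shell’s current directory, so the following error will be thrown: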

/vmfs/volumes/58da7103-7810a474-5615-000eb6b4b6ea # esxcli software vib install -d ESXi550-201612001.zip

[MetadataDownloadError]

Could not download from depot at zip:/var/log/vmware/ESXi550-201612001.zip?index.xml, skipping (('zip:/var/log/vmware/ESXi550-201612001.zip?index.xml', '', "Error extracting index.xml from /var/log/vmware/ESXi550-201612001.zip: [Errno 2] No such file or directory: '/var/log/vmware/ESXi550-201612001.zip'"))

url = zip:/var/log/vmware/ESXi550-201612001.zip?index.xml

Please refer to the log file for more details.

/vmfs/volumes/58da7103-7810a474-5615-000eb6b4b6ea #
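One way to avoid this error when working from inside the datastore directory is to expand the file name to a full path before handing it to esxcli. This is a minimal sketch; the helper name `full_path` is hypothetical.

```shell
# Hypothetical helper: turn a bundle name into the full path esxcli expects.
full_path() {
    case "$1" in
        /*) printf '%s\n' "$1" ;;              # already a full path
        *)  printf '%s/%s\n' "$(pwd)" "$1" ;;  # prefix the current directory
    esac
}
```

From the datastore directory, the install then becomes `esxcli software vib install -d "$(full_path ESXi550-201612001.zip)"`.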

Omitting the -d flag throws the following error, because esxcli then treats the path as part of the command name:

/vmfs/volumes/58da7103-7810a474-5615-000eb6b4b6ea # esxcli software vib install /vmfs/volumes/rvbd_vsp_datastore/ESXi550-201612001.zip

Error: Unknown command or namespace software vib install /vmfs/volumes/rvbd_vsp_datastore/ESXi550-201612001.zip

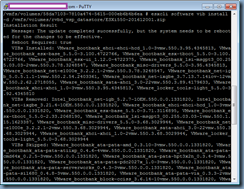

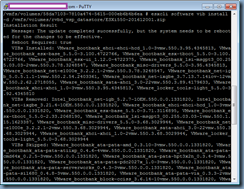

The patch installs successfully when the full path is specified, and the output looks like this:

/vmfs/volumes/58da7103-7810a474-5615-000eb6b4b6ea # esxcli software vib install -d /vmfs/volumes/rvbd_vsp_datastore/ESXi550-201612001.zip

Installation Result

Message: The update completed successfully, but the system needs to be rebooted for the changes to be effective.

Reboot Required: true

VIBs Installed: VMware_bootbank_ehci-ehci-hcd_1.0-3vmw.550.3.95.4345813, VMware_bootbank_esx-base_5.5.0-3.100.4722766, VMware_bootbank_esx-tboot_5.5.0-3.100.4722766, VMware_bootbank_esx-ui_1.12.0-4722375, VMware_bootbank_lsi-msgpt3_00.255.03.03-2vmw.550.3.78.3248547, VMware_bootbank_misc-drivers_5.5.0-3.95.4345813, VMware_bootbank_net-e1000e_3.2.2.1-2vmw.550.3.78.3248547, VMware_bootbank_net-igb_5.0.5.1.1-1vmw.550.2.54.2403361, VMware_bootbank_net-ixgbe_3.7.13.7.14iov-12vmw.550.2.62.2718055, VMware_bootbank_sata-ahci_3.0-22vmw.550.3.89.4179633, VMware_bootbank_xhci-xhci_1.0-3vmw.550.3.95.4345813, VMware_locker_tools-light_5.5.0-3.92.4345810

VIBs Removed: Intel_bootbank_net-igb_5.2.7-1OEM.550.0.0.1331820, Intel_bootbank_net-ixgbe_3.21.4-1OEM.550.0.0.1331820, VMware_bootbank_ehci-ehci-hcd_1.0-3vmw.550.0.0.1331820, VMware_bootbank_esx-base_5.5.0-3.71.3116895, VMware_bootbank_esx-tboot_5.5.0-2.33.2068190, VMware_bootbank_lsi-msgpt3_00.255.03.03-1vmw.550.1.15.1623387, VMware_bootbank_misc-drivers_5.5.0-3.68.3029944, VMware_bootbank_net-e1000e_3.2.2.1-2vmw.550.3.68.3029944, VMware_bootbank_sata-ahci_3.0-22vmw.550.3.68.3029944, VMware_bootbank_xhci-xhci_1.0-2vmw.550.3.68.3029944, VMware_locker_tools-light_5.5.0-3.68.3029944

VIBs Skipped: VMware_bootbank_ata-pata-amd_0.3.10-3vmw.550.0.0.1331820, VMware_bootbank_ata-pata-atiixp_0.4.6-4vmw.550.0.0.1331820, VMware_bootbank_ata-pata-cmd64x_0.2.5-3vmw.550.0.0.1331820, VMware_bootbank_ata-pata-hpt3x2n_0.3.4-3vmw.550.0.0.1331820, VMware_bootbank_ata-pata-pdc2027x_1.0-3vmw.550.0.0.1331820, VMware_bootbank_ata-pata-serverworks_0.4.3-3vmw.550.0.0.1331820, VMware_bootbank_ata-pata-sil680_0.4.8-3vmw.550.0.0.1331820, VMware_bootbank_ata-pata-via_0.3.3-2vmw.550.0.0.1331820, VMware_bootbank_block-cciss_3.6.14-10vmw.550.0.0.1331820, VMware_bootbank_elxnet_10.2.309.6v-1vmw.550.3.68.3029944, VMware_bootbank_esx-dvfilter-generic-fastpath_5.5.0-0.0.1331820, VMware_bootbank_esx-xlibs_5.5.0-0.0.1331820, VMware_bootbank_esx-xserver_5.5.0-0.0.1331820, VMware_bootbank_ima-qla4xxx_2.01.31-1vmw.550.0.0.1331820, VMware_bootbank_ipmi-ipmi-devintf_39.1-4vmw.550.0.0.1331820, VMware_bootbank_ipmi-ipmi-msghandler_39.1-4vmw.550.0.0.1331820, VMware_bootbank_ipmi-ipmi-si-drv_39.1-4vmw.550.0.0.1331820, VMware_bootbank_lpfc_10.0.100.1-1vmw.550.0.0.1331820, VMware_bootbank_lsi-mr3_0.255.03.01-2vmw.550.3.68.3029944, VMware_bootbank_misc-cnic-register_1.72.1.v50.1i-1vmw.550.0.0.1331820, VMware_bootbank_mtip32xx-native_3.3.4-1vmw.550.1.15.1623387, VMware_bootbank_net-be2net_4.6.100.0v-1vmw.550.0.0.1331820, VMware_bootbank_net-bnx2_2.2.3d.v55.2-1vmw.550.0.0.1331820, VMware_bootbank_net-bnx2x_1.72.56.v55.2-1vmw.550.0.0.1331820, VMware_bootbank_net-cnic_1.72.52.v55.1-1vmw.550.0.0.1331820, VMware_bootbank_net-e1000_8.0.3.1-3vmw.550.0.0.1331820, VMware_bootbank_net-enic_1.4.2.15a-1vmw.550.0.0.1331820, VMware_bootbank_net-forcedeth_0.61-2vmw.550.0.0.1331820, VMware_bootbank_net-mlx4-core_1.9.7.0-1vmw.550.0.0.1331820, VMware_bootbank_net-mlx4-en_1.9.7.0-1vmw.550.0.0.1331820, VMware_bootbank_net-nx-nic_5.0.621-1vmw.550.0.0.1331820, VMware_bootbank_net-tg3_3.123c.v55.5-1vmw.550.2.33.2068190, VMware_bootbank_net-vmxnet3_1.1.3.0-3vmw.550.2.39.2143827, 
VMware_bootbank_ohci-usb-ohci_1.0-3vmw.550.0.0.1331820, VMware_bootbank_qlnativefc_1.0.12.0-1vmw.550.0.0.1331820, VMware_bootbank_rste_2.0.2.0088-4vmw.550.1.15.1623387, VMware_bootbank_sata-ata-piix_2.12-10vmw.550.2.33.2068190, VMware_bootbank_sata-sata-nv_3.5-4vmw.550.0.0.1331820, VMware_bootbank_sata-sata-promise_2.12-3vmw.550.0.0.1331820, VMware_bootbank_sata-sata-sil24_1.1-1vmw.550.0.0.1331820, VMware_bootbank_sata-sata-sil_2.3-4vmw.550.0.0.1331820, VMware_bootbank_sata-sata-svw_2.3-3vmw.550.0.0.1331820, VMware_bootbank_scsi-aacraid_1.1.5.1-9vmw.550.0.0.1331820, VMware_bootbank_scsi-adp94xx_1.0.8.12-6vmw.550.0.0.1331820, VMware_bootbank_scsi-aic79xx_3.1-5vmw.550.0.0.1331820, VMware_bootbank_scsi-bnx2fc_1.72.53.v55.1-1vmw.550.0.0.1331820, VMware_bootbank_scsi-bnx2i_2.72.11.v55.4-1vmw.550.0.0.1331820, VMware_bootbank_scsi-fnic_1.5.0.4-1vmw.550.0.0.1331820, VMware_bootbank_scsi-hpsa_5.5.0-44vmw.550.0.0.1331820, VMware_bootbank_scsi-ips_7.12.05-4vmw.550.0.0.1331820, VMware_bootbank_scsi-lpfc820_8.2.3.1-129vmw.550.0.0.1331820, VMware_bootbank_scsi-megaraid-mbox_2.20.5.1-6vmw.550.0.0.1331820, VMware_bootbank_scsi-megaraid-sas_5.34-9vmw.550.3.68.3029944, VMware_bootbank_scsi-megaraid2_2.00.4-9vmw.550.0.0.1331820, VMware_bootbank_scsi-mpt2sas_14.00.00.00-3vmw.550.3.68.3029944, VMware_bootbank_scsi-mptsas_4.23.01.00-9vmw.550.3.68.3029944, VMware_bootbank_scsi-mptspi_4.23.01.00-9vmw.550.3.68.3029944, VMware_bootbank_scsi-qla2xxx_902.k1.1-12vmw.550.3.68.3029944, VMware_bootbank_scsi-qla4xxx_5.01.03.2-6vmw.550.0.0.1331820, VMware_bootbank_uhci-usb-uhci_1.0-3vmw.550.0.0.1331820

/vmfs/volumes/58da7103-7810a474-5615-000eb6b4b6ea #
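If the install is scripted, the "Reboot Required" line in the output above is the part worth checking. This is a minimal sketch that assumes the output format shown above; the helper name `reboot_required` is hypothetical.

```shell
# Hypothetical helper: read esxcli install output on stdin and succeed
# only when it reports "Reboot Required: true".
reboot_required() {
    grep -q '^[[:space:]]*Reboot Required: true'
}
```

It could be used by saving the install output to a file and then running `reboot_required < /tmp/patch.log && echo "schedule a reboot"`, where `/tmp/patch.log` is just an example location.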

Proceed to vMotion the VMs on this host to another host, or shut them down if they don’t need to stay up, and then restart the host:
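The restart can also be done from the same SSH session. The following is a minimal sketch, assuming the host is managed from the ESXi shell and that `esxcli vm process list` prints nothing when no VM is powered on; the function name `reboot_host_for_patch` is hypothetical.

```shell
# Hypothetical helper: reboot the host once no VMs are running on it.
reboot_host_for_patch() {
    # Assumption: an empty listing means no VM is powered on here.
    if [ -n "$(esxcli vm process list)" ]; then
        echo "error: VMs are still running on this host" >&2
        return 1
    fi
    # The reboot command is only accepted in maintenance mode.
    esxcli system maintenanceMode set --enable true || return 1
    esxcli system shutdown reboot --reason "$1"
}
```

For example: `reboot_host_for_patch "Applied ESXi550-201612001"`. Remember to take the host out of maintenance mode once it is back up.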

The new build number should be displayed once the host has successfully restarted:
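The build can also be confirmed from the command line after the reboot. This is a minimal sketch, assuming `esxcli system version get` prints a line of the form `Build: Releasebuild-4722766`; the helper names `running_build` and `is_build` are hypothetical.

```shell
# Hypothetical helper: print the numeric build of the running host.
running_build() {
    # Keep only the digits after the last "-" on the Build line.
    esxcli system version get |
        awk '$1 == "Build:" { n = $2; sub(/^.*-/, "", n); print n }'
}

# Hypothetical helper: succeed when the host runs the expected build.
is_build() {
    [ "$(running_build)" = "$1" ]
}
```

For the patch used in this post, `is_build 4722766 && echo "host is on the expected build"` checks against the esx-base version shown in the install output.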